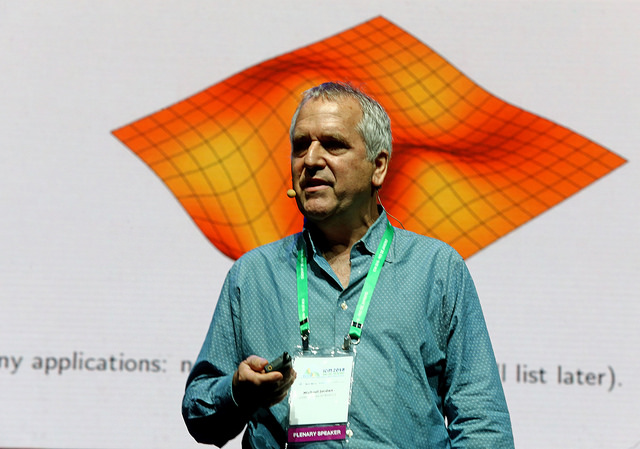

Often referred to as ‘machine learning’ or ‘artificial intelligence’, the field of ‘data engineering’ is still immature and straddles statistics, physics, computer science and math. Before beginning his hour-long presentation, Jordan warned journalists in the audience against generic “buzzwords”, which fail to capture the full scope of the data challenge. Many of the problems have little to do with imitating human intelligence or understanding the brain, he said, so it is more useful to look at existing and future data systems in transportation, medicine and commerce. Jordan suggested a wordier label that better conveys the scale of the problem: “Algorithmic decision-making under uncertainty, in large-scale networks and markets.”

Math is emerging as a powerful tool in the data field, and many theorems have already been proven. The study of gradient flows and the variational perspective on mechanics are historical threads that can be brought to bear on the area. Berkeley colleagues are working with Jordan to build new connections, studying aspects of gradient-based optimization from a continuous-time, variational point of view. His team is also examining second-order dynamics that yield fast-converging algorithms.
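The link between continuous-time dynamics and optimization algorithms can be made concrete with a small Python sketch. This is an illustration, not Jordan's or his team's method. The sketch assumes a hypothetical two-dimensional quadratic test function and compares two algorithms:

* Plain gradient descent, which is the explicit Euler discretization of the gradient flow dx/dt = -∇f(x).
* Nesterov's accelerated gradient method. Su, Boyd and Candès showed that its continuous-time limit is the second-order ODE x'' + (3/t)x' + ∇f(x) = 0.

The helper names `quadratic`, `gradient_descent` and `nesterov` are made up for this example.

```python
from typing import Callable, List

Vector = List[float]


def quadratic(a: Vector):
    """Hypothetical test problem: f(x) = 0.5 * sum_i a_i * x_i^2 and its gradient."""
    def f(x: Vector) -> float:
        return 0.5 * sum(ai * xi * xi for ai, xi in zip(a, x))

    def grad(x: Vector) -> Vector:
        return [ai * xi for ai, xi in zip(a, x)]

    return f, grad


def gradient_descent(grad: Callable[[Vector], Vector], x0: Vector,
                     step: float, iters: int) -> Vector:
    # Explicit Euler steps on the first-order gradient flow dx/dt = -grad f(x).
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x


def nesterov(grad: Callable[[Vector], Vector], x0: Vector,
             step: float, iters: int) -> Vector:
    # Nesterov's accelerated gradient in the form analysed by Su, Boyd and Candes:
    #   x_k = y_{k-1} - s * grad f(y_{k-1})
    #   y_k = x_k + (k - 1) / (k + 2) * (x_k - x_{k-1})
    # The growing momentum coefficient is what produces second-order dynamics.
    x_prev = list(x0)
    y = list(x0)
    for k in range(1, iters + 1):
        g = grad(y)
        x = [yi - step * gi for yi, gi in zip(y, g)]
        beta = (k - 1) / (k + 2)
        y = [xi + beta * (xi - xpi) for xi, xpi in zip(x, x_prev)]
        x_prev = x
    return x_prev  # x_iters


# Ill-conditioned example: curvatures 1 and 0.01, step size 1/L with L = 1.
f, grad = quadratic([1.0, 0.01])
x0 = [1.0, 1.0]
f_gd = f(gradient_descent(grad, x0, 1.0, 100))
f_nag = f(nesterov(grad, x0, 1.0, 100))
print(f"gradient descent after 100 steps: {f_gd:.2e}")
print(f"Nesterov after 100 steps:         {f_nag:.2e}")
```

The flat direction of this problem has curvature 0.01. Along it, gradient descent shrinks the coordinate by only a factor of 0.99 per step. The momentum term in the accelerated method should let it make noticeably more progress in the same number of gradient evaluations.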

Jordan concluded by re-emphasizing the importance of mathematical tools for tackling real-world data problems. Although math has already been applied to some of these problems, he acknowledged that the field is still very immature.

A member of the National Academy of Sciences, the National Academy of Engineering and the American Academy of Arts and Sciences, Jordan is also a Fellow of the American Association for the Advancement of Science. The Institute of Mathematical Statistics has named him both a Neyman Lecturer and a Medallion Lecturer. His research has earned several awards, including the IJCAI Research Excellence Award in 2016, the David E. Rumelhart Prize in 2015 and the ACM/AAAI Allen Newell Award in 2009. He is a Fellow of the AAAI, ACM, ASA, CSS, IEEE, IMS, ISBA and SIAM.